Team members

Destiny Bailey, Zahra Ebrahimi Jouibari, Mira Patel, Sevy Veeken

Project summary

SightMap.ai is a comprehensive AI assistant tool that helps visually impaired individuals understand and navigate their surroundings.

Keywords

Computer Vision, Object Detection, Depth Estimation, Clear Path Navigation

Inspiration

Our vision plays a pivotal role in interpreting and navigating the world around us. However, this fundamental sense isn't accessible to everyone. According to the World Health Organization, more than 2.5 billion people globally would benefit from assistive products to enhance their interactions with their environments. The prevalence of vision impairment varies greatly across regions, often depending on the accessibility, affordability, and quality of eye care services and education.

To address these gaps, the WHO & UNICEF Global Report on Assistive Technology (2022) provides a solid framework. At the core of its recommendations lies the need for safe, effective, and affordable assistive technologies, a mission that SightMap.ai takes to heart. We recognize the importance of not only serving individuals but also involving them and their families throughout the development and deployment of solutions.

We consulted with an expert from the Canadian National Institute for the Blind (CNIB), a SmartLife Head Coach and Public Education Coordinator. Our discussions with them shed light on the significant challenges that current assistive technologies present. One of the standout insights was the financial barrier many visually impaired individuals face, often compounded by high poverty rates within the community. As it stands, few provinces offer sufficient funding for these essential technologies. Additionally, one of the primary navigational obstacles highlighted was the last five meters of a journey, a critical point where many existing aids fall short. Crucially, any assistive device must be easy to use and discreet, ensuring that users can navigate confidently without drawing unnecessary attention.

List of technologies

- Google Colab

- GitHub

- OpenCV

- Python

- PyTorch

- Matplotlib

- NumPy

- Pillow

- MiDaS

- Ultralytics YOLO

- OpenAI API

Project development

SightMap is a tool designed to assist visually impaired users by analyzing their environment through video. It identifies objects in the environment, determines their distance from the user, and then generates an audio guide to help them navigate safely by avoiding obstacles.

To begin, we consulted with a SmartLife Head Coach and Public Education Coordinator at the Canadian National Institute for the Blind (CNIB) to better understand the challenges faced by the blind and low-vision community with existing assistive technologies. One of the most significant challenges we identified was the difficulty in navigating the last five meters of a journey. This insight led us to develop a computer vision-based solution centered around our innovative clear path navigation algorithm.

Our approach starts with using a pretrained YOLO model, which is trained on diverse datasets like COCO (Common Objects in Context) and VOC (Pascal Visual Object Classes). COCO is a large-scale object detection, segmentation, and captioning dataset with 80 object categories, while VOC is a dataset for object detection and segmentation with 20 object classes and over 11,000 images.
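As a rough illustration of this detection step, the sketch below assumes the Ultralytics Python package and its COCO-pretrained `yolov8n.pt` weights. The weights file, the `Detection` record, the helper names `to_detections` and `detect`, and the 0.25 confidence cut-off are hypothetical choices, not SightMap's actual code. The raw model output is converted into plain records so that later stages do not depend on the detector library.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float
    box: tuple  # (x1, y1, x2, y2) in pixels


def to_detections(boxes_xyxy, class_ids, confidences, names, min_conf=0.25):
    """Convert raw YOLO outputs (plain lists) into Detection records."""
    detections = []
    for box, cls, conf in zip(boxes_xyxy, class_ids, confidences):
        if conf < min_conf:
            continue  # drop low-confidence boxes (threshold is an assumption)
        detections.append(
            Detection(names[int(cls)], float(conf), tuple(float(v) for v in box))
        )
    return detections


def detect(model, frame, min_conf=0.25):
    """Run an already-loaded Ultralytics YOLO model on one frame."""
    result = model(frame, verbose=False)[0]  # one Results object per input image
    return to_detections(
        result.boxes.xyxy.tolist(),
        result.boxes.cls.tolist(),
        result.boxes.conf.tolist(),
        result.names,  # dict mapping class id -> class name
        min_conf,
    )
```

Usage, assuming `ultralytics` is installed: create `model = YOLO("yolov8n.pt")` once (with `from ultralytics import YOLO`), then call `detect(model, frame)` for each video frame so the weights are loaded only one time.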

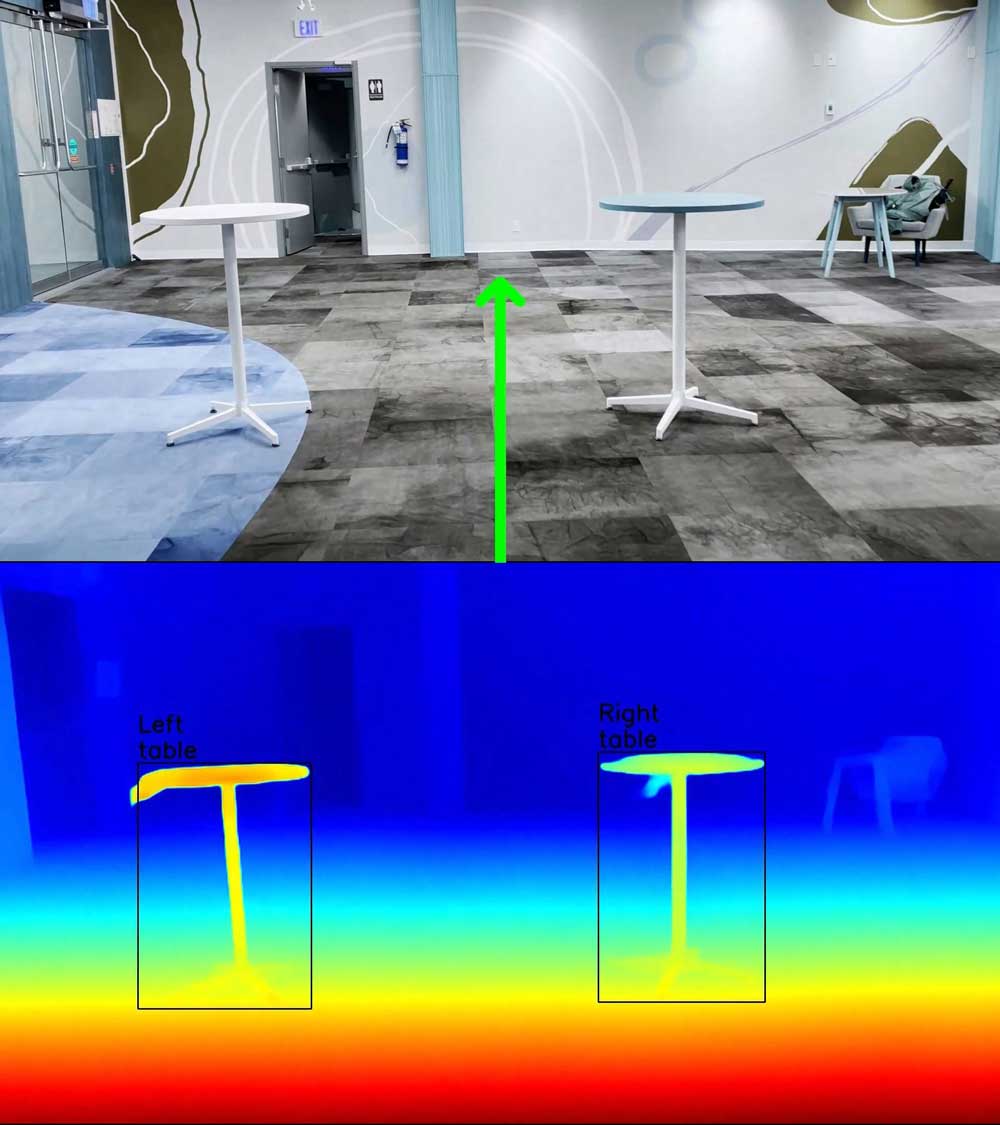

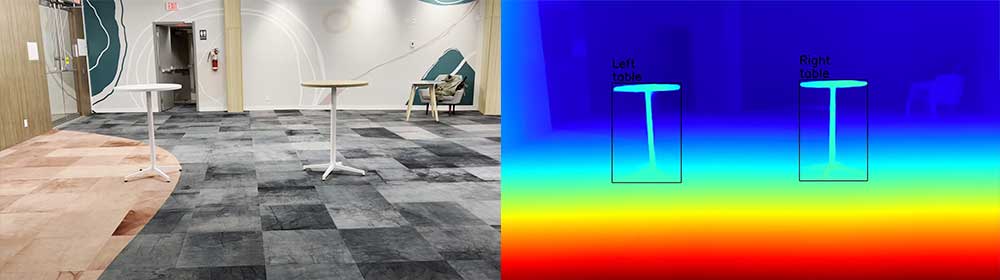

Once objects are identified in the scene, we need to estimate their respective distances from the user. To achieve this, we experimented with various depth estimation models and found that MiDaS provided the highest accuracy of those we tested. MiDaS is trained on several datasets, including the KITTI dataset, which is widely used for autonomous driving and computer vision tasks.
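A minimal sketch of the depth step, following the torch.hub loading pattern published in the MiDaS repository (`intel-isl/MiDaS`). The choice of the `MiDaS_small` variant and the helper names `load_midas`, `estimate_depth`, and `normalize_depth` are assumptions for illustration. Note that MiDaS predicts relative inverse depth: larger values mean closer, and the values are not metric distances.

```python
import numpy as np


def load_midas(model_type="MiDaS_small"):
    """Load a MiDaS model and its matching input transform from torch.hub."""
    import torch  # lazy import: only needed when actually running MiDaS
    model = torch.hub.load("intel-isl/MiDaS", model_type)
    model.eval()
    transforms = torch.hub.load("intel-isl/MiDaS", "transforms")
    # DPT_Large / DPT_Hybrid use dpt_transform; MiDaS_small uses small_transform.
    if model_type == "MiDaS_small":
        return model, transforms.small_transform
    return model, transforms.dpt_transform


def estimate_depth(model, transform, frame_rgb):
    """Return a relative inverse-depth map with the same height/width as the frame."""
    import torch
    with torch.no_grad():
        prediction = model(transform(frame_rgb))
        # Resize the low-resolution prediction back to the frame size.
        prediction = torch.nn.functional.interpolate(
            prediction.unsqueeze(1),
            size=frame_rgb.shape[:2],
            mode="bicubic",
            align_corners=False,
        ).squeeze()
    return prediction.cpu().numpy()


def normalize_depth(inverse_depth):
    """Rescale to [0, 1]; because MiDaS outputs inverse depth, 1.0 marks the closest pixels."""
    lo, hi = float(inverse_depth.min()), float(inverse_depth.max())
    if hi - lo < 1e-8:
        return np.zeros_like(inverse_depth, dtype=float)  # flat map: no depth contrast
    return (inverse_depth - lo) / (hi - lo)
```

The frame passed to `estimate_depth` should be an RGB array; frames read with OpenCV are BGR and need `cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)` first.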

With the object detection and depth information in hand, we combined these elements into our clear path navigation algorithm. The algorithm first detects objects in the scene and then uses the depth map to order them by distance, from closest to furthest. The scene is divided into three regions (left, middle, and right) based on predefined thresholds. If two of these regions contain objects, the algorithm suggests the direction of the region without any obstacles. If all three regions contain objects, the algorithm chooses the region whose objects are furthest from the user, reducing the likelihood of encountering obstacles.
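The decision rule described above can be sketched as a small function. This is a hypothetical reconstruction, not SightMap's code: obstacles arrive as `(region, distance)` pairs with regions named `left`, `middle`, and `right`, a larger `distance` means further away, and the cases the text does not cover (zero or one blocked region) are resolved by an assumed preference for going straight.

```python
REGIONS = ("left", "middle", "right")


def suggest_direction(obstacles):
    """Pick a direction from (region, distance) pairs; larger distance = further away."""
    # Order obstacles from closest to furthest.
    ordered = sorted(obstacles, key=lambda o: o[1])
    nearest = {}
    for region, distance in ordered:
        nearest.setdefault(region, distance)  # first hit per region is its closest obstacle
    clear = [r for r in REGIONS if r not in nearest]
    if clear:
        # Assumption: prefer straight ahead when the middle is clear, else the first clear side.
        return "middle" if "middle" in clear else clear[0]
    # All three regions are blocked: choose the one whose closest obstacle is furthest away.
    return max(REGIONS, key=lambda r: nearest[r])
```

If the distances come straight from a MiDaS inverse-depth map, where larger means closer, they would need to be negated or inverted before being passed in.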

Finally, the suggested path is converted into text, and we use the OpenAI API to generate an audio guide that is provided to the user.
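One possible shape for this last step, sketched under assumptions: the instruction wording in `direction_to_message` is invented for illustration, and `speak` assumes the official `openai` Python package (v1 or later), an `OPENAI_API_KEY` in the environment, and the `tts-1` model with the `alloy` voice. SightMap may have used a different model, voice, or endpoint.

```python
def direction_to_message(direction, nearest_label=None):
    """Turn a suggested direction into a short spoken instruction (wording is hypothetical)."""
    phrases = {
        "left": "Path is clear on your left.",
        "middle": "Path is clear straight ahead.",
        "right": "Path is clear on your right.",
    }
    message = phrases[direction]
    if nearest_label:
        # Mention the closest obstacle first, then the suggested direction.
        message = f"{nearest_label.capitalize()} ahead. " + message
    return message


def speak(text, out_path="guide.mp3"):
    """Synthesize speech with the OpenAI text-to-speech endpoint and save it as MP3."""
    from openai import OpenAI  # lazy import: only needed when audio is generated
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.audio.speech.create(model="tts-1", voice="alloy", input=text)
    with open(out_path, "wb") as f:
        f.write(response.content)  # raw audio bytes (MP3 by default)
    return out_path
```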

Impact and Innovation

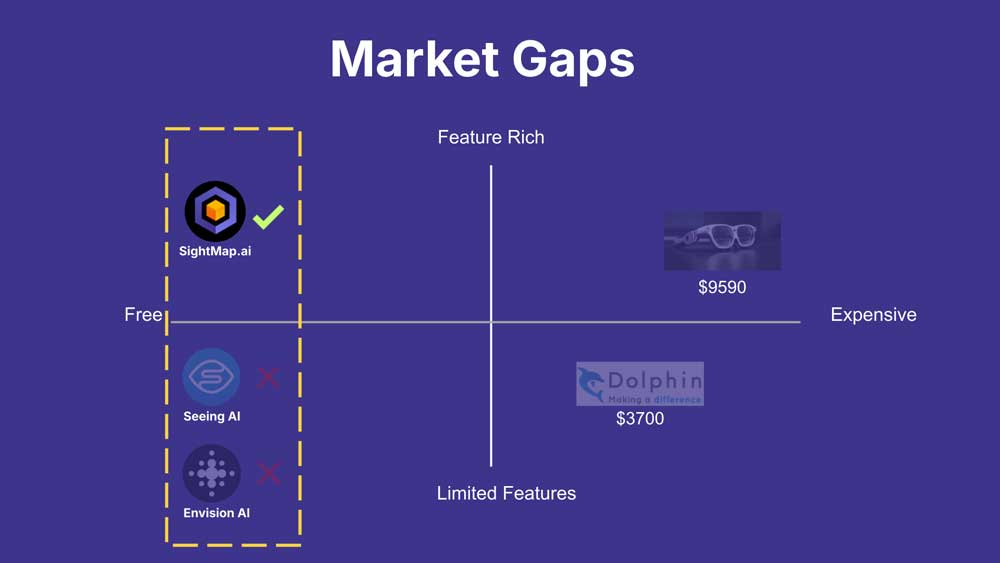

One of the most notable advances SightMap.ai offers over existing solutions is its ability to provide real-time, comprehensive spatial awareness. Unlike current market solutions, which are either prohibitively expensive or offer limited functionality, SightMap.ai aims to bridge these gaps effectively and affordably. By detecting objects at varying distances and prioritizing them by proximity, SightMap.ai provides essential information for immediate decision-making, with the goal of reducing collisions and improving overall navigation.

Free solutions, such as the Seeing AI and Envision AI apps, often fall short by offering sporadic object identification without considering the spatial context or relevance of those objects, leaving users without a clear understanding of their environment. SightMap.ai addresses this by using computer vision algorithms not only to identify objects and obstacles but also to assess their spatial relationship to the user, offering an intuitive understanding of one’s environment. This is critical for informing users of the closest obstacles first, so that they can navigate their surroundings according to real-world priorities.

Another key innovation of SightMap.ai is its seamless integration of video input for processing, a feature noticeably absent in many existing products. This capability allows for continuous feedback, eliminating the need for users to pause their movement to capture images, a common hindrance with existing tools. This ensures fluid navigation and aligns with natural human movement, making the experience less disruptive and more in line with the way sighted individuals interact with their surroundings.

Furthermore, while many current technologies, such as the OrCam MyEye or Eyedaptic glasses, come with daunting price tags, SightMap.ai makes this technology more accessible. By addressing cost barriers, SightMap.ai brings critical navigational assistance within the reach of a broader audience, ensuring that more individuals can lead independent and safer lives without the added stress of financial burden.

Challenges

The development of SightMap was challenging because it involved more than simple model training: we had to build a full pipeline of modules that needed to work seamlessly together.

Our initial challenge was that not all YOLO labels were relevant to our needs, so we had to identify the most important objects common to both indoor and outdoor settings. Our goal was to create a consistent model that performs well in both environments.

Additionally, since the images are captured from a moving camera, the visibility of objects changes, affecting YOLO’s accuracy. For example, when approaching a trash can, YOLO can accurately identify it, as we included a “Trash Can” category. However, as one gets closer and only the lid becomes visible, the object might no longer be recognized. To address this, we expanded the categories to enhance YOLO’s accuracy and minimize the risk of collision.
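Restricting and expanding the label set, as described above, can be sketched as a filter with an alias table. The label names below are hypothetical, since the write-up does not list SightMap's categories. A detector can only emit classes it was trained on, so partial-view labels such as `lid` would require a custom or fine-tuned model.

```python
# Hypothetical obstacle classes relevant both indoors and outdoors.
RELEVANT_LABELS = {"person", "chair", "bench", "bicycle", "car", "table", "trash can"}

# Hypothetical aliases: partial views or synonyms mapped back to one obstacle class.
LABEL_ALIASES = {"dining table": "table", "bin": "trash can", "lid": "trash can"}


def filter_obstacles(labels):
    """Map raw detector labels to obstacle classes and drop irrelevant ones."""
    kept = []
    for label in labels:
        label = LABEL_ALIASES.get(label.lower(), label.lower())
        if label in RELEVANT_LABELS:
            kept.append(label)
    return kept
```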

For the next module, which calculates the distance of objects, we used a depth map. A depth map assigns each pixel a grayscale value based on its estimated distance from the camera. However, these per-pixel values do not directly give a single distance for a whole object. To overcome this, we converted the depth values covering each object into a single, more meaningful measure that represents the object’s distance.
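One way to turn per-pixel depth values into a single per-object measure is to take the median over the object's bounding box. The sketch below is an assumption about how this could be done, not SightMap's documented method; shrinking the box to its central region (the hypothetical `inner` parameter) reduces the influence of background pixels near the box edges.

```python
import numpy as np


def object_depth(depth_map, box, inner=0.5):
    """Summarize the depth values inside an (x1, y1, x2, y2) box as one number.

    Uses the median of the central part of the box, so stray background
    pixels near the edges have less influence than with a plain mean.
    """
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    # Shrink the box around its centre to `inner` of its width and height.
    cx1 = int(x1 + w * (1 - inner) / 2)
    cx2 = int(x2 - w * (1 - inner) / 2)
    cy1 = int(y1 + h * (1 - inner) / 2)
    cy2 = int(y2 - h * (1 - inner) / 2)
    # Keep at least one pixel in each dimension.
    patch = depth_map[cy1:max(cy2, cy1 + 1), cx1:max(cx2, cx1 + 1)]
    return float(np.median(patch))
```

With a MiDaS inverse-depth map, a larger result means the object is closer.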

The subsequent challenge involved determining the direction of an object relative to the camera’s position. Images, being composed of pixels, do not inherently indicate the direction in which an object is located. To resolve this issue, we calculated the centroid of each object and, based on its position within the image frame, determined whether the object is situated in the middle, to the right, or to the left of the user.
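The centroid test described above might look like the sketch below. The split of the frame into equal thirds (`left_edge=1/3`, `right_edge=2/3`) is an assumed placeholder for the project's predefined thresholds, and the function names are hypothetical.

```python
def centroid(box):
    """Centre point (x, y) of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return (x1 + x2) / 2, (y1 + y2) / 2


def region_of(box, frame_width, left_edge=1 / 3, right_edge=2 / 3):
    """Classify a box as left, middle, or right from its centroid's x position."""
    cx, _ = centroid(box)
    rel = cx / frame_width  # 0.0 = left edge of the frame, 1.0 = right edge
    if rel < left_edge:
        return "left"
    if rel > right_edge:
        return "right"
    return "middle"
```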

Beyond these technical challenges, we placed a strong emphasis on the ethical aspects of SightMap, particularly since it operates on camera images. From the outset, we designed the pipeline not to store any images: frames are processed in real time and then discarded, which helps protect users’ privacy.
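A sketch of a capture loop that never writes frames to disk, assuming OpenCV for camera access. The names `camera_frames` and `run_guidance`, and the choice to announce a message only when it changes (so the same audio is not repeated on every frame), are hypothetical design decisions, not taken from the source.

```python
def camera_frames(source=0):
    """Yield frames from a camera with OpenCV; frames stay in memory and are never saved."""
    import cv2  # lazy import so run_guidance() can be used without OpenCV
    cap = cv2.VideoCapture(source)
    try:
        while True:
            ok, frame = cap.read()
            if not ok:
                break
            yield frame
    finally:
        cap.release()  # free the camera even if the consumer stops early


def run_guidance(frames, process_frame):
    """Turn each frame into a guidance message without keeping past frames."""
    last = None
    for frame in frames:
        message = process_frame(frame)
        del frame  # release this function's reference before yielding
        if message != last:  # only announce changes to avoid repeating audio
            yield message
            last = message
```

In use, `run_guidance(camera_frames(0), process_frame)` would be driven by a `process_frame` function that chains detection, depth estimation, and the clear path rule; nothing reaches disk unless code explicitly calls something like `cv2.imwrite`.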

What we learned & accomplishments we’re proud of

During the AI4Good Lab, we learned about using AI for social good, including ethical AI development, stakeholder engagement, and the importance of interdisciplinary collaboration. The program emphasized the significance of understanding the societal context in which AI technologies are developed and deployed. Additionally, the lectures, TA sessions, workshops, weekly group projects, and networking opportunities equipped us with the foundational technical skills to develop our own project that leveraged machine learning for social good. This foundation helped us structure our project around user needs, ethical considerations, and innovation, which were essential throughout its development.

Throughout the development of SightMap, we learned to navigate the complexities of integrating technical capabilities with real-world constraints. We strengthened our skills in problem solving, prototyping, and teamwork, collaborating to improve our project based on feedback and evolving requirements. This approach allowed us to refine our solution effectively, improving its performance and usability.

What’s next for us/the project

As one of the Accelerator Awards winners, our team had the opportunity to take part in Amii’s startup mentorship. We learned about the business side of projects and were able to think critically about the scalability of our product. Given the project’s timeframe and our intention to develop a free product, we did not believe that the startup model was the right fit. We believe the project aligns more closely with a non-profit model; however, we would require additional time and funding to continue its development. This would involve iterative development following user testing with organizations we had already established connections with. Unfortunately, our team members will be continuing their studies, and we have decided together not to proceed with the app. Regardless, we will carry forward what we learned during our time in this lab and while working together on this project!

Acknowledgements & References

Acknowledgements:

We would like to express our sincere gratitude to our TA, Jake Tuero, and our mentor, Ankit Anand, for their invaluable support. We also extend our thanks to Doina Precup and Angelique Mannella, the creators of AI4Good Lab, for their vision and dedication to leveraging AI for social good. We are also grateful to Yorsa Kazemi, Jennifer Addison, and everyone who supported this program.

Additionally, we offer heartfelt thanks to CIFAR, Google DeepMind, Mila — Quebec Artificial Intelligence Institute, Alberta Machine Intelligence Institute (Amii), Vector Institute, the Design Fabrication Zone at Toronto Metropolitan University, and Manulife for making this opportunity possible.

References:

Canadian National Institute for the Blind: https://www.cnib.ca/en?region=on

Everingham, Mark, et al. "The Pascal Visual Object Classes (VOC) Challenge." International Journal of Computer Vision 88 (2010): 303-338.

Lin, Tsung-Yi, et al. "Microsoft COCO: Common Objects in Context." Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, 2014, Proceedings, Part V. Springer International Publishing, 2014.

Geiger, Andreas, et al. "Vision Meets Robotics: The KITTI Dataset." The International Journal of Robotics Research 32.11 (2013): 1231-1237.

Redmon, Joseph, et al. "You Only Look Once: Unified, Real-Time Object Detection." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

Ranftl, René, et al. "Towards Robust Monocular Depth Estimation: Mixing Datasets for Zero-Shot Cross-Dataset Transfer." IEEE Transactions on Pattern Analysis and Machine Intelligence 44.3 (2022): 1623-1637.

World Health Organization & UNICEF, Global Report on Assistive Technology (2022): https://www.who.int/publications/i/item/9789240049451